    WARNING: An NVIDIA kernel module 'nvidia-uvm' appears to be already loaded in your kernel.

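    # Kill every process that still holds an NVIDIA device node.
    # NR>1 skips the lsof header row; sort -u removes duplicate PIDs.
    lsof -w /dev/nvidia* | awk 'NR>1 {print $2}' | sort -u | xargs -r kill -9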
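The one-liner above kills every holder of `/dev/nvidia*` immediately. A gentler variant is sketched below: it lists the holders, sends SIGTERM first, and falls back to SIGKILL only for processes that are still alive. The function names `pids_from_lsof` and `release_nvidia_devices` and the 5-second grace period are illustrative assumptions, not part of the original article.

```shell
#!/usr/bin/env bash

# Extract unique numeric PIDs from lsof output on stdin, skipping the header row.
pids_from_lsof() {
    awk 'NR > 1 && $2 ~ /^[0-9]+$/ { print $2 }' | sort -un
}

# Hypothetical helper: stop holders of /dev/nvidia* gently, then forcefully.
release_nvidia_devices() {
    local pids pid
    pids=$(lsof -w /dev/nvidia* 2>/dev/null | pids_from_lsof)
    if [ -z "$pids" ]; then
        echo "no process holds /dev/nvidia*"
        return 0
    fi
    echo "processes holding NVIDIA devices:" $pids
    # Word splitting of $pids is intentional: one argument per PID.
    kill -TERM $pids 2>/dev/null
    sleep 5   # assumed grace period
    for pid in $pids; do
        # kill -0 only checks whether the process still exists.
        if kill -0 "$pid" 2>/dev/null; then
            kill -KILL "$pid"
        fi
    done
    return 0
}
```

Run as root, `release_nvidia_devices` should leave the device nodes free. After that, `modprobe -r nvidia_uvm` is one way to unload the module named in the warning before re-running the driver installer, assuming nothing else depends on it.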

    nvidia-smi
    Sat Feb 22 12:00:29 2025
    +-----------------------------------------------------------------------------------------+
    | NVIDIA-SMI 560.28.03              Driver Version: 560.28.03      CUDA Version: 12.6     |
    |-----------------------------------------+------------------------+----------------------+
    | GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
    |                                         |                        |               MIG M. |
    |=========================================+========================+======================|
    |   0  NVIDIA H20                     Off |   00000000:0F:00.0 Off |                    0 |
    | N/A   31C    P0            113W /  500W |       1MiB /  97871MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   1  NVIDIA H20                     Off |   00000000:34:00.0 Off |                    0 |
    | N/A   29C    P0            111W /  500W |       1MiB /  97871MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   2  NVIDIA H20                     Off |   00000000:48:00.0 Off |                    0 |
    | N/A   32C    P0            116W /  500W |       1MiB /  97871MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   3  NVIDIA H20                     Off |   00000000:5A:00.0 Off |                    0 |
    | N/A   30C    P0            111W /  500W |       1MiB /  97871MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   4  NVIDIA H20                     Off |   00000000:87:00.0 Off |                    0 |
    | N/A   31C    P0            117W /  500W |       1MiB /  97871MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   5  NVIDIA H20                     Off |   00000000:AE:00.0 Off |                    0 |
    | N/A   28C    P0            111W /  500W |       1MiB /  97871MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   6  NVIDIA H20                     Off |   00000000:C2:00.0 Off |                    0 |
    | N/A   31C    P0            114W /  500W |       1MiB /  97871MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   7  NVIDIA H20                     Off |   00000000:D7:00.0 Off |                    0 |
    | N/A   29C    P0            116W /  500W |       1MiB /  97871MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+

    +-----------------------------------------------------------------------------------------+
    | Processes:                                                                              |
    |  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
    |        ID   ID                                                               Usage      |
    |=========================================================================================|
    |  No running processes found                                                             |
    +-----------------------------------------------------------------------------------------+

This raises a new problem: under gpu-operator, the nvidia-cuda-validator-** pod keeps restarting.
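A first step in chasing a restart loop like this is usually to find which validator pods have restarted and read their events and previous logs. The sketch below does that with `kubectl`. The `gpu-operator` namespace and the function names `restarting_validators` and `inspect_validators` are assumptions made for illustration; adjust the namespace to wherever the operator is actually installed.

```shell
#!/usr/bin/env bash

# Assumption: the operator runs in the gpu-operator namespace.
NS="${NS:-gpu-operator}"

# Print "<pod> <restart count>" for validator pods that restarted at least once.
# Expects `kubectl get pods --no-headers` output on stdin
# (columns: NAME READY STATUS RESTARTS AGE).
restarting_validators() {
    awk '$1 ~ /^nvidia-cuda-validator-/ && $4 + 0 > 0 { print $1, $4 }'
}

# Hypothetical helper: show recent events and previous-container logs per pod.
inspect_validators() {
    kubectl -n "$NS" get pods --no-headers | restarting_validators |
    while read -r pod restarts; do
        echo "== $pod (restarts: $restarts)"
        # The Events section sits at the end of the describe output.
        kubectl -n "$NS" describe pod "$pod" | tail -n 20
        # --previous reads the logs of the container instance that crashed.
        kubectl -n "$NS" logs "$pod" --all-containers --previous --tail=50 || true
    done
}
```

Calling `inspect_validators` on a machine with cluster access prints one section per restarting pod. The events and the previous container's log should narrow down why the validation fails.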

Comments

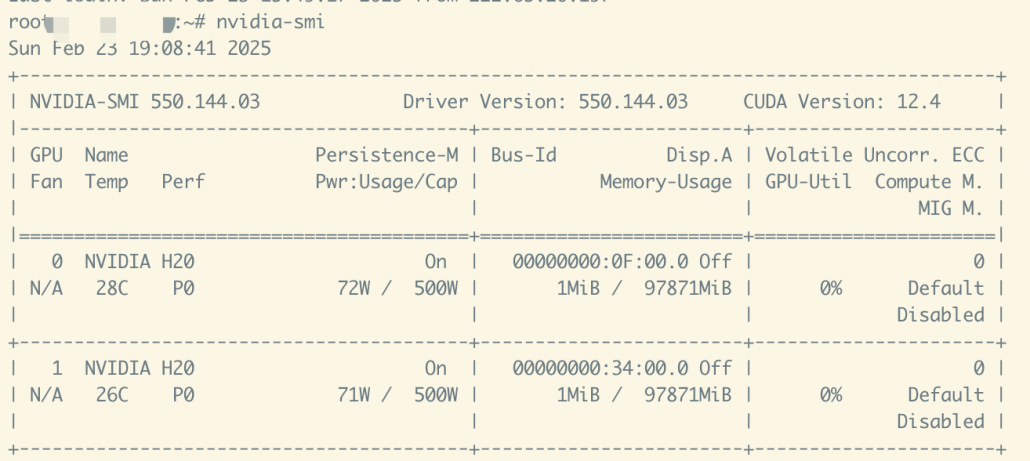

Earlier in the article it says CUDA 12.4 does not support the H20, so why was version 12.4 chosen later on?

@bing At the time it was probably CUDA 12.6 that was incompatible; there is a version compatibility mapping.