Background:

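I recently took on a POC, and one of its tasks was load-testing a K8s cluster with ClusterLoader2. I assumed it would be a routine job, but it ended up taking me two full weeks! Last night I finally got it running end to end, so I'm writing it down while it's fresh, as a list of pitfalls to avoid for myself and for newcomers.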

🚀 Minimal how-to

Clone the code (the version must match!)

```bash
git clone -b release-1.31 https://github.com/kubernetes/perf-tests.git
```

The Kubernetes environment here is 1.31, so I cloned that release branch directly.
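Since the branch has to follow the cluster's minor version, here is a minimal sketch that derives the branch name from a server version string, such as the serverVersion.gitVersion field printed by kubectl version -o json. It assumes perf-tests keeps naming branches release-MAJOR.MINOR, as with the release-1.31 branch used here; releaseBranch is a hypothetical helper, not part of perf-tests.

```go
package main

import (
	"fmt"
	"regexp"
)

// versionRe captures MAJOR and MINOR from strings like "v1.31.4" or "v1.31.4-gke.1000".
var versionRe = regexp.MustCompile(`^v?(\d+)\.(\d+)`)

// releaseBranch (hypothetical helper) maps a Kubernetes server version to a
// perf-tests branch name, assuming the release-MAJOR.MINOR naming scheme.
func releaseBranch(gitVersion string) (string, error) {
	m := versionRe.FindStringSubmatch(gitVersion)
	if m == nil {
		return "", fmt.Errorf("unrecognized version %q", gitVersion)
	}
	return fmt.Sprintf("release-%s.%s", m[1], m[2]), nil
}

func main() {
	branch, err := releaseBranch("v1.31.4")
	if err != nil {
		panic(err)
	}
	// Use it as: git clone -b <branch> https://github.com/kubernetes/perf-tests.git
	fmt.Println(branch) // prints release-1.31
}
```

Vendor suffixes such as -gke.1000 are ignored because only the leading MAJOR.MINOR is matched.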

The final launch command

```bash
./clusterloader --masterip=10.29.229.221 \
  --testconfig=config.yaml \
  --provider=local \
  --provider-configs=ROOT_KUBECONFIG=/root/kubeconfig4clusterloader \
  --kubeconfig=/root/kubeconfig4clusterloader \
  --v=5 \
  --enable-prometheus-server=false \
  --nodes=2 2>&1 | tee output.txt
```

The config.yaml test configuration I used

```yaml
name: nginx-pod-latency-test
namespace:
  number: 1

tuningSets:
- name: Uniform5qps
  globalQPSLoad:
    qps: 10
    burst: 10

steps:
- module:
    path: /perf-tests/clusterloader2/testing/load/modules/pod-startup-latency.yaml
    params:
      namespaces: 1
      minPodsInSmallCluster: 10
      image: registry.cn-shanghai.aliyuncs.com/my2021/2048:motd
      resourceLimits:
      - name: cpu
        limit: 1
      - name: memory
        limit: 1Gi

- module:
    path: /perf-tests/clusterloader2/testing/load/modules/reconcile-objects.yaml
    params:
      actionName: "create"
      namespaces: 1
      tuningSet: Uniform5qps
      testMaxReplicaFactor: 1.0
      operationTimeout: "5m"
      deploymentImage: "registry.cn-shanghai.aliyuncs.com/my2021/2048:motd"
      daemonSetImage: "registry.cn-shanghai.aliyuncs.com/my2021/2048:motd"
      bigDeploymentSize: 10
      bigDeploymentsPerNamespace: 1
      mediumDeploymentSize: 0
      mediumDeploymentsPerNamespace: 0
      smallDeploymentSize: 0
      smallDeploymentsPerNamespace: 0
      smallStatefulSetSize: 0
      smallStatefulSetsPerNamespace: 0
      mediumStatefulSetSize: 0
      mediumStatefulSetsPerNamespace: 0
      smallJobSize: 0
      smallJobsPerNamespace: 0
      mediumJobSize: 0
      mediumJobsPerNamespace: 0
      bigJobSize: 0
      bigJobsPerNamespace: 0
      daemonSetReplicas: 0
      CL2_CHECK_IF_PODS_ARE_UPDATED: "true"
      CL2_DISABLE_DAEMONSETS: "true"
      CL2_ENABLE_PVS: "false"

- module:
    path: /perf-tests/clusterloader2/testing/load/modules/reconcile-objects.yaml
    params:
      actionName: "delete"
      namespaces: 1
      tuningSet: Uniform5qps
      testMaxReplicaFactor: 0.0
      operationTimeout: "5m"
      bigDeploymentSize: 10
      bigDeploymentsPerNamespace: 0
      mediumDeploymentSize: 0
      mediumDeploymentsPerNamespace: 0
      smallDeploymentSize: 0
      smallDeploymentsPerNamespace: 0
      smallStatefulSetSize: 0
      smallStatefulSetsPerNamespace: 0
      mediumStatefulSetSize: 0
      mediumStatefulSetsPerNamespace: 0
      smallJobSize: 0
      smallJobsPerNamespace: 0
      mediumJobSize: 0
      mediumJobsPerNamespace: 0
      bigJobSize: 0
      bigJobsPerNamespace: 0
      daemonSetReplicas: 0
      CL2_CHECK_IF_PODS_ARE_UPDATED: "true"
      CL2_DISABLE_DAEMONSETS: "true"
      CL2_ENABLE_PVS: "false"
```

💥 The pitfalls I fell into

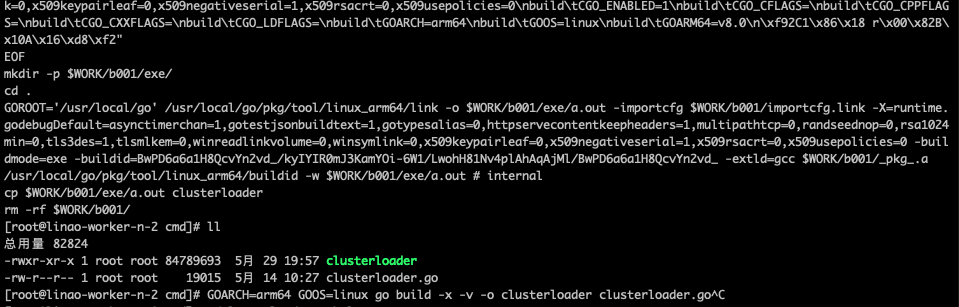

Pitfall 1: building the binary fails

go build -x -v -o clusterloader clusterloader.go

It fails with a version error:

go: errors parsing go.mod:

/root/perf-tests/clusterloader2/go.mod:4: invalid go version '1.22.4': must match format 1.23

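As the message suggests, editing that line to the two-part form it expects (e.g. go 1.22) lets the build continue. The error comes from Go toolchains older than 1.21, which only accept go 1.N in go.mod and don't understand three-part versions such as 1.22.4. A colleague told me that Go 1.24.3 builds without touching the file; I upgraded Go on the node, ran the build again, and sure enough it worked.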

Installing Go 1.24 and building the binary

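```bash
# Install Go 1.24 from the golang-backports PPA (Ubuntu)
sudo add-apt-repository ppa:longsleep/golang-backports
sudo apt install golang-1.24
# Put Go 1.24 at the front of PATH
echo 'export PATH=/usr/lib/go-1.24/bin:$PATH' >> ~/.profile
# If ~/.profile already appends Go 1.24 to the end of PATH, move it to the front instead
sed -i 's|export PATH=$PATH:/usr/lib/go-1.24/bin|export PATH=/usr/lib/go-1.24/bin:$PATH|' ~/.profile
source ~/.profile
# Check which go binary and GOROOT are now in use
which go
go env GOROOT
# Build the clusterloader binary
cd perf-tests/clusterloader2/cmd/
GOARCH=amd64 GOOS=linux go build -x -v -o clusterloader clusterloader.go
```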

Takeaway: with Go 1.24 or newer, the build goes through smoothly.
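To find out before building whether the node's Go is new enough for the branch's go.mod, a small standalone check can help. This is a sketch under a few assumptions: the helper names are hypothetical, it compares only the numeric major.minor.patch parts (suffixes such as rc1 are ignored, and development builds read as 0.0.0), and it checks the toolchain that runs it, via runtime.Version(). Run it from outside the perf-tests tree, e.g. cd /tmp && go run checkgo.go /root/perf-tests/clusterloader2/go.mod, so that an old go command doesn't trip over the same go.mod while starting up.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"runtime"
	"strconv"
	"strings"
)

// goDirective returns the version on the "go" line of go.mod contents.
func goDirective(gomod string) (string, bool) {
	sc := bufio.NewScanner(strings.NewReader(gomod))
	for sc.Scan() {
		f := strings.Fields(sc.Text())
		if len(f) >= 2 && f[0] == "go" {
			return f[1], true
		}
	}
	return "", false
}

// parse turns "1.22.4" or "go1.24.3" into [1 22 4]; missing parts count as 0.
func parse(v string) [3]int {
	var out [3]int
	if f := strings.Fields(v); len(f) > 0 {
		v = f[0] // runtime.Version() may carry extra words after the version
	}
	v = strings.TrimPrefix(v, "go")
	for i, part := range strings.SplitN(v, ".", 3) {
		end := 0 // keep only the leading digits, e.g. "25rc1" -> "25"
		for end < len(part) && part[end] >= '0' && part[end] <= '9' {
			end++
		}
		out[i], _ = strconv.Atoi(part[:end])
	}
	return out
}

// atLeast reports whether version have >= version want.
func atLeast(have, want string) bool {
	h, w := parse(have), parse(want)
	for i := 0; i < 3; i++ {
		if h[i] != w[i] {
			return h[i] > w[i]
		}
	}
	return true
}

func main() {
	path := "go.mod"
	if len(os.Args) > 1 {
		path = os.Args[1]
	}
	data, err := os.ReadFile(path)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	want, ok := goDirective(string(data))
	if !ok {
		fmt.Fprintln(os.Stderr, "no go directive in", path)
		os.Exit(1)
	}
	have := runtime.Version()
	fmt.Printf("go.mod wants %s, toolchain is %s, new enough: %v\n", want, have, atLeast(have, want))
}
```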

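Pitfall 2: path voodoo

The weird symptom: the referenced files simply could not be found

Files referenced inside config.yaml can't be given as absolute paths; they only work as paths relative to config.yaml itself, in the format below. Confusingly, the leading / does not make the path absolute: judging from the other paths in this post (kubeconfig and junit.xml under /root, the repo at /root/perf-tests), config.yaml apparently sat in /root, and this path was resolved against that directory.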

- module:

path: /perf-tests/clusterloader2/testing/load/modules/reconcile-objects.yaml
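One way to make sense of this behaviour is to assume that ClusterLoader2 joins each module path onto the directory holding config.yaml. Under that assumption (not verified against the ClusterLoader2 source here), Go's filepath.Join shows why the leading / is harmless and why a genuine absolute path breaks: the prefix ends up doubled. resolveModulePath is a hypothetical helper.

```go
package main

import (
	"fmt"
	"path/filepath"
)

// resolveModulePath is a hypothetical helper: it assumes ClusterLoader2 joins
// a module's path onto the directory that contains the main config file.
// filepath.Join cleans the result, so a leading "/" on modulePath is absorbed.
func resolveModulePath(configFile, modulePath string) string {
	return filepath.Join(filepath.Dir(configFile), modulePath)
}

func main() {
	cfg := "/root/config.yaml"
	// The form used in this post: lands under /root/perf-tests.
	fmt.Println(resolveModulePath(cfg, "/perf-tests/clusterloader2/testing/load/modules/reconcile-objects.yaml"))
	// prints /root/perf-tests/clusterloader2/testing/load/modules/reconcile-objects.yaml

	// A "real" absolute path gets the /root prefix twice, so the file is not found.
	fmt.Println(resolveModulePath(cfg, "/root/perf-tests/clusterloader2/testing/load/modules/reconcile-objects.yaml"))
	// prints /root/root/perf-tests/clusterloader2/testing/load/modules/reconcile-objects.yaml
}
```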

Pitfall 3: Prometheus

With --enable-prometheus-server=true,

ClusterLoader2 creates a monitoring namespace, several pods, and a StorageClass named ssd:

kubectl get sc |grep ssd

ssd kubernetes.io/gce-pd Delete Immediate false 125m

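That ssd StorageClass uses the kubernetes.io/gce-pd provisioner, which can only provision disks on Google Cloud, so it can't work on a local cluster like this one. The fix, naturally, is to skip Prometheus: set the flag to false, or drop it entirely.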

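Tuning

1. The default latency threshold

The default here is 5 seconds, which really isn't enough to bring up a pod in a virtual environment, so I raised it. Later I found that physical machines finish everything within 1-3 seconds, so no change is needed there.

{{$podStartupLatencyThreshold := DefaultParam .CL2_POD_STARTUP_LATENCY_THRESHOLD "5s"}} # changed to 50s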

/perf-tests/clusterloader2/testing/load/simple-deployment.yaml

2. ConfigMaps and Secrets

deployment.yaml references a ConfigMap and a Secret, which kept the big-deployment test from passing; commenting out those two settings made the problem go away.

🎉 Light at the end of the tunnel

A successful run produces output similar to this:

```text
......
I0529 20:08:37.024845 1474234 simple_test_executor.go:87] StatelessPodStartupLatency_PodStartupLatency: {
  "version": "1.0",
  "dataItems": [
    {
      "data": {
        "Perc50": 32737.558438,
        "Perc90": 44789.393112,
        "Perc99": 48925.585207
      },
      "unit": "ms",
      "labels": {
        "Metric": "schedule_to_watch"
      }
    },
    {
      "data": {
        "Perc50": 33592.923438,
        "Perc90": 44913.893112,
        "Perc99": 50046.076207
      },
      "unit": "ms",
      "labels": {
        "Metric": "pod_startup"
      }
    },
......
I0529 20:08:37.027944 1474234 reflector.go:319] Stopping reflector apps/v1, Resource=deployments (0s) from pkg/mod/k8s.io/client-go@v0.32.3/tools/cache/reflector.go:251
I0529 20:08:47.083242 1474234 simple_test_executor.go:402] Resources cleanup time: 10.058361346s
I0529 20:08:47.083302 1474234 clusterloader.go:252] --------------------------------------------------------------------------------
I0529 20:08:47.083309 1474234 clusterloader.go:253] Test Finished
I0529 20:08:47.083314 1474234 clusterloader.go:254] Test: config-5.yaml
I0529 20:08:47.083320 1474234 clusterloader.go:255] Status: Success
I0529 20:08:47.083326 1474234 clusterloader.go:259] --------------------------------------------------------------------------------
JUnit report was created: /root/junit.xml
I0529 20:08:47.086100 1474234 prometheus.go:331] Get snapshot from Prometheus
```
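Rather than eyeballing the log, the summary JSON can be pulled apart programmatically. A minimal sketch, assuming you have copied the JSON that follows StatelessPodStartupLatency_PodStartupLatency: out of output.txt; the struct mirrors only the fields visible in the log above, and percentile is a hypothetical helper, not a ClusterLoader2 API.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// latencySummary mirrors the JSON structure printed in the log.
type latencySummary struct {
	Version   string `json:"version"`
	DataItems []struct {
		Data   map[string]float64 `json:"data"`
		Unit   string             `json:"unit"`
		Labels map[string]string  `json:"labels"`
	} `json:"dataItems"`
}

// percentile returns a value such as "Perc99" for the item whose Metric label
// matches, in that item's unit (ms in the log above).
func percentile(raw []byte, metric, perc string) (float64, error) {
	var s latencySummary
	if err := json.Unmarshal(raw, &s); err != nil {
		return 0, err
	}
	for _, item := range s.DataItems {
		if item.Labels["Metric"] == metric {
			v, ok := item.Data[perc]
			if !ok {
				return 0, fmt.Errorf("metric %q has no %s", metric, perc)
			}
			return v, nil
		}
	}
	return 0, fmt.Errorf("metric %q not found", metric)
}

func main() {
	// Trimmed copy of the summary from the log (only these two items kept).
	raw := []byte(`{"version":"1.0","dataItems":[
	  {"data":{"Perc50":32737.558438,"Perc90":44789.393112,"Perc99":48925.585207},"unit":"ms","labels":{"Metric":"schedule_to_watch"}},
	  {"data":{"Perc50":33592.923438,"Perc90":44913.893112,"Perc99":50046.076207},"unit":"ms","labels":{"Metric":"pod_startup"}}]}`)
	p50, err := percentile(raw, "pod_startup", "Perc50")
	if err != nil {
		panic(err)
	}
	fmt.Printf("pod_startup P50: %.1f s\n", p50/1000) // prints pod_startup P50: 33.6 s
}
```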

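One more note: in the final test on physical machines, pods started in 1-3 seconds on average, far faster than the output above, where the pod_startup P50 is roughly 33.6 seconds.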

